As an SEO, you're often busy trying to get your content indexed and ranked by Google. But sometimes, you may want to remove pages. Removing pages may be needed for several reasons:

- The page contained sensitive information that you don't want to be publicly available. After removing the sensitive content from the page, it may still show up in Google, and you want to remove that.

- Pages with dummy content or otherwise irrelevant content show up in the search results, possibly outranking more relevant pages.

- Thank you pages, order confirmation pages, password reset pages and other pages that may not be relevant for search engines.

Removing one or more pages from search engines is easy, but you need to follow a number of steps. This article tells you how it works.

Google Search Console's removal report

Google Search Console allows you to temporarily remove pages from its index. This is a first step and helps you quickly hide the page from the search results. Of course, you can only remove pages from Google from a site that you own, and for which you verified Search Console access.

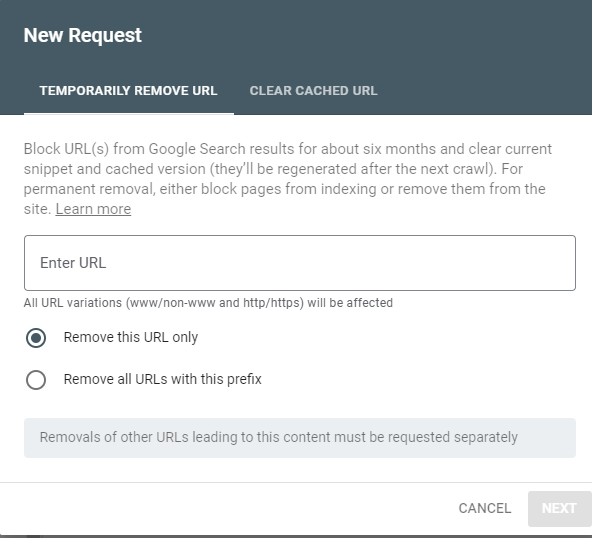

Log in to Search Console, select the right property and go to Removals. In the Temporary Removals tab, click the New Request button.

Enter the URL of the page you want to be removed. There's also the option to remove all URLs with this prefix. This is helpful to remove an entire section.

That's not it!

You're not done after this step! Google only hides the page for about 6 months. You need to make some changes to the page, to prevent it from showing up in Google again after that period.

Bing's Content Removal Tool

Don't forget about Bing! After verifying your site in Bing Webmaster Tools, use the Content Removal Tool to remove the page from the Bing index.

Similar to Google Search Console, you just provide the URL of the page, select Remove page, and click Submit. Unlike Google Search Console, Bing won't let you remove entire folders, so removing an entire section from Bing may take a bit longer.

Setting no-index instructions on the page

To prevent the page from being indexed again, you'll need to add no-index instructions to the page. This instructs search engines to not index that page. Search engines normally follow that instruction.

There are two ways to add no-index instructions to a page, while still having the page available to your visitors:

No-index meta tag

Adding a noindex meta tag to the page will stop search engines from indexing the page. Add this code to the head of the page:

<meta name="robots" content="noindex">

Most content management systems also allow you to set noindex meta tags. In WordPress, you can use Yoast SEO to do this. Look for the option "Allow search engines to show this Post in search results?", and select No.

No-index HTTP header

Alternatively, you can set an X-Robots-Tag HTTP header. The response for the page should include the following header:

X-Robots-Tag: noindex

You can do this using backend code or in the .htaccess file, and you probably need a developer to do this.
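As a rough illustration of the "backend code" route, here is a minimal sketch that assumes your site runs as a Python WSGI application. The names `noindex_middleware`, `NOINDEX_PATHS`, `demo_app` and `response_headers`, as well as the example paths, are hypothetical and only serve the example. Your own stack may offer a simpler way to set response headers.

```python
from wsgiref.util import setup_testing_defaults

# Hypothetical set of paths that should stay out of the index.
NOINDEX_PATHS = {"/thank-you", "/password-reset"}


def noindex_middleware(app, noindex_paths=NOINDEX_PATHS):
    """Wrap a WSGI app and add 'X-Robots-Tag: noindex' for selected paths."""

    def wrapped(environ, start_response):
        path = environ.get("PATH_INFO", "")

        def patched_start_response(status, headers, exc_info=None):
            if path in noindex_paths:
                # Drop any existing X-Robots-Tag so the header is not duplicated.
                headers = [(k, v) for k, v in headers if k.lower() != "x-robots-tag"]
                headers.append(("X-Robots-Tag", "noindex"))
            return start_response(status, headers, exc_info)

        return app(environ, patched_start_response)

    return wrapped


def demo_app(environ, start_response):
    """Tiny placeholder app standing in for your real site."""
    start_response("200 OK", [("Content-Type", "text/html; charset=utf-8")])
    return [b"<h1>Thanks for your order</h1>"]


def response_headers(app, path):
    """Call the app for one path and return its response headers as a dict."""
    environ = {}
    setup_testing_defaults(environ)
    environ["PATH_INFO"] = path
    captured = {}

    def start_response(status, headers, exc_info=None):
        captured.update(headers)

    list(app(environ, start_response))
    return captured


if __name__ == "__main__":
    app = noindex_middleware(demo_app)
    print(response_headers(app, "/thank-you"))
    print(response_headers(app, "/"))
```

The page itself stays available to visitors. Only the extra response header tells search engines not to index it.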

Alternative ways of blocking access to pages

If you don't want a page to be available to anyone (not just search engines), there are two ways to do that:

- Remove the page altogether, and show a 404 error page instead. Search engines will normally remove pages that no longer exist from their index.

- Password-protect the page, if you only want the page to be available to some people. As with a 404 page, search engines will remove password-protected pages from their index.

No matter which method you choose to remove a page from search engines, it may take some time for the page to be removed. Google's and Bing's removal tools help you speed up that process.

To see if the page has been set to noindex, use our Noindex Check tool.
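If you prefer a quick local check, the sketch below looks for a noindex instruction in a page's HTML and in its response headers. It is a simplified illustration, not a replacement for a full checker: the function name `has_noindex` is hypothetical, and the sketch ignores crawler-specific meta tags (such as `name="googlebot"`), user-agent prefixes in the header, and the `none` directive.

```python
from html.parser import HTMLParser


class _RobotsMetaParser(HTMLParser):
    """Collect the directives of every <meta name="robots"> tag."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {k.lower(): (v or "") for k, v in attrs}
        if attr.get("name", "").lower() == "robots":
            # content may hold several comma-separated directives.
            self.directives.extend(
                part.strip().lower() for part in attr.get("content", "").split(",")
            )


def has_noindex(html, headers=None):
    """Return True if the HTML or the response headers carry a noindex directive."""
    parser = _RobotsMetaParser()
    parser.feed(html)
    if "noindex" in parser.directives:
        return True

    # Header names are case-insensitive, so compare in lowercase.
    for name, value in (headers or {}).items():
        if name.lower() == "x-robots-tag":
            parts = [p.strip().lower() for p in value.split(",")]
            if "noindex" in parts:
                return True
    return False


if __name__ == "__main__":
    page = '<html><head><meta name="robots" content="noindex"></head></html>'
    print(has_noindex(page))
    print(has_noindex("<p>Hello</p>", {"X-Robots-Tag": "noindex"}))
```

Pass the HTML and headers you fetched with whatever HTTP client you already use. The function itself makes no network requests.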

Optional: Blocking the page in robots.txt

After adding the noindex instructions, you may want to instruct search engines to not ever crawl that page again. The robots.txt file can be used to block search engines like Google from crawling part of your site, or specific pages.

Important: is your page indexed already? Don't start with blocking the page in the robots.txt file. This will prevent Google from visiting the page. Any noindex instructions won't be picked up.

Only after the page has been removed from the index, and the noindex instructions have been picked up, should you block it using the robots.txt file. It might save you some crawl budget, but is otherwise not needed.
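Before publishing a robots.txt change, it can help to confirm which URLs it would actually block. The following sketch uses Python's standard `urllib.robotparser` module. The rules in `ROBOTS_TXT`, the example URLs, and the helper name `is_crawlable` are assumptions made up for this illustration. Note that Python's parser does plain prefix matching and may treat wildcard patterns differently from Google.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content that blocks two sections for all crawlers.
ROBOTS_TXT = """\
User-agent: *
Disallow: /thank-you/
Disallow: /drafts/
"""


def is_crawlable(robots_txt, url, user_agent="Googlebot"):
    """Return True if the given robots.txt rules allow user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for url in (
        "https://www.example.com/thank-you/order-123",
        "https://www.example.com/blog/some-post",
    ):
        print(url, "crawlable:", is_crawlable(ROBOTS_TXT, url))
```

A blocked URL here only means crawlers are asked not to fetch it. It says nothing about whether the page is still in the index.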

In summary:

The right way to remove a page from Google and Bing:

- Request removal of the page in Google Search Console and Bing Webmaster Tools.

- Set a noindex meta tag or HTTP header on the page that you want to exclude from the search index.

- (Optional) Only after the page is removed from the search index, change the robots.txt file so that the page will not be crawled.

Note that even if you skip the last step, Google will follow the noindex instructions and not index the page. The only purpose of the last step is to save some crawl budget.