Google can’t rank your pages without indexing them first. Unfortunately, indexing isn’t always straightforward.

So today, we’ll show you how to fix the most common problems for all 20 Google coverage statuses.

What is the Google Coverage Status?

As you create and publish content, Google will crawl it and (hopefully) index it. The coverage status describes where your page stands in that process. If Google runs into an issue while crawling or indexing the page, the coverage status reflects that too. For most pages, the goal is the “Submitted and indexed” status.

Where Can I Check the Google Coverage Status?

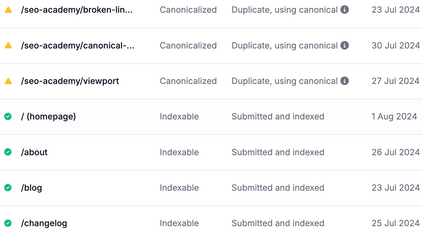

You can check the indexation status of your pages in Google Search Console (GSC), under “Pages” (previously “Coverage”). GSC’s indexation report is also the place to dig into more advanced cases.

The fastest way is to use our Indexation report, which gets data straight from Google.

You’ll get notifications about indexing issues, SEO tasks, and website opportunities. We use Google’s URL Inspection API to show each page’s coverage status.

The Best Google Coverage Status: Submitted and indexed

If Google Search Console shows the “Submitted and indexed” status for your page, well done! You’re good to go.

Indexed, not submitted in sitemap

You’ll see the “Indexed, not submitted in sitemap” coverage status if your page URL was indexed, but it’s not in your sitemap.

Typically, this happens if you didn’t submit an XML sitemap, or if you added the sitemap only recently.

How to Fix the Indexed, not submitted in sitemap Google Coverage Issue

Create a sitemap and submit it to the Google Search Console. If you've done that, it may take a few days for Google to crawl the sitemap and update the status.
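If your CMS or SEO plugin doesn’t generate a sitemap for you, a minimal one is easy to build. Here’s a rough Python sketch, assuming you already have a list of the URLs you want indexed (the example.com URLs are placeholders). It follows the sitemaps.org format and only writes the required `<loc>` element for each page.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a minimal XML sitemap listing each URL in a <loc> element."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as & automatically.
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Assumption: placeholder URLs; replace them with the pages you want indexed.
    print(build_sitemap(["https://example.com/", "https://example.com/blog/"]))
```

Save the output as sitemap.xml at your site root, then submit its URL in the Sitemaps report in GSC.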

Discovered - currently not indexed

If Google knows about your URLs but hasn’t crawled (let alone indexed) them yet, there could be a few potential causes:

- You’re running out of crawl budget -> If your website has millions of pages, choose which ones you want Google to crawl and block the others in the robots.txt file.

- You have too many redirects -> Redirects waste Googlebot’s time, so make sure your redirects are implemented correctly and avoid redirect chains.

- Your website can’t handle the load -> If your website returns 5xx errors, Googlebot slows down or stops crawling to avoid overloading the server. Check your server logs and discuss the issue with your hosting provider.

- Your website is new -> Google takes longer to index new websites. Make sure your internal links are set up correctly.

- Your content isn’t high-quality -> Don’t post thin, duplicate, outdated, or low-value AI-generated content. Follow Google’s Search Quality Rater Guidelines. Block irrelevant sections (e.g., the comments section) from being crawled in the robots.txt file.

- Your internal links aren’t set up correctly -> Google should see your most important pages linked to frequently, so the bots can prioritize crawling them.

Google can’t crawl everything, but you can make sure it crawls your most important pages.
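To check the internal-linking point in practice, you can count how many of your own pages link to each URL. This is a minimal Python sketch, assuming you’ve already exported a link graph from a crawl of your site (a dict mapping each page to the internal URLs it links to). The `min_links` default of 3 is an arbitrary example, not a Google number.

```python
from collections import Counter


def inbound_link_counts(link_graph):
    """Count how many distinct internal pages link to each URL.

    Assumption: link_graph maps each page URL to the internal URLs it links to.
    Self-links are ignored, and repeated links from one page count once.
    """
    counts = Counter({url: 0 for url in link_graph})
    for source, targets in link_graph.items():
        for target in set(targets):
            if target != source:
                counts[target] += 1
    return counts


def weakly_linked(link_graph, important_pages, min_links=3):
    """Return important pages with fewer than min_links inbound internal links.

    Assumption: min_links=3 is an arbitrary threshold; tune it for your site.
    """
    counts = inbound_link_counts(link_graph)
    return sorted(page for page in important_pages if counts[page] < min_links)
```

Pages that come back from `weakly_linked` are good candidates for extra links from your navigation, hub pages, or related articles.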

URL is unknown to Google

Use the URL Inspection tool in GSC to identify why Google can’t find your pages. Typically, this happens because of:

- Your website or server blocking Googlebot from crawling

- Robots.txt file blocking

- “Noindex” tags

Fix the issue and request indexing.

Crawled - currently not indexed Google Indexing Issue

Google has successfully crawled the page but has chosen not to index it.

First, check that the pages with this coverage status are worth indexing. For example, Google may choose not to index your comments section or paginated URLs.

Google typically leaves out important pages if:

- Your page shows an expired product -> Handle unavailable eCommerce products correctly from an SEO perspective.

- Your URL is redirecting -> It can take a while for Google to process a redirect, so it may keep the redirecting URL indexed while leaving out your destination URL. If this happens often, create a temporary sitemap with the redirect URLs whose destinations haven’t been indexed yet.

- Low-quality content -> If Google considers your content thin or duplicated, it may not index it. Strengthen the content, or add the “noindex” tag to pages that shouldn’t be indexed.

Excluded by ‘noindex’ tag Coverage Status

Not all pages on your website should be indexed.

For example, you don’t want Google to index your paginated pages (/blog/p=57).

In these cases, add the “noindex” tag to those pages.

If you’re seeing the “Excluded by ‘noindex’ tag” coverage status, check if the page was supposed to be indexed.

If it was, remove the tag.

Use our noindex check tool to see if the page is marked as noindex.
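If you’d rather check a page yourself, remember that “noindex” can live in two places: a robots meta tag in the HTML or an X-Robots-Tag HTTP header. The Python sketch below checks both, assuming you’ve already fetched the page’s HTML and response headers (fetching is left out). It’s deliberately simple: it treats any X-Robots-Tag value containing “noindex” as a match, even one aimed at a different crawler.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect the content of <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # HTMLParser lowercases attribute names; values may be None.
        attrs = {name: (value or "") for name, value in attrs}
        if attrs.get("name", "").lower() in ("robots", "googlebot"):
            self.directives.append(attrs.get("content", "").lower())


def is_noindex(html, headers=None):
    """Return True if the meta robots tags or the X-Robots-Tag header contain noindex.

    Assumption: html and headers were fetched beforehand (e.g., by your crawler).
    """
    parser = RobotsMetaParser()
    parser.feed(html)
    values = list(parser.directives)
    for name, value in (headers or {}).items():
        if name.lower() == "x-robots-tag":
            values.append(value.lower())
    return any("noindex" in value for value in values)
```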

Alternate page with proper canonical tag in Google Search Console

If a page on your website declares another page as its canonical URL (and Google agrees), you’ll see the “Alternate page with proper canonical tag” Google coverage status. First, sanity-check the status: should Google really leave this duplicate page out of its index?

If not, look into possible causes and fixes:

- If a page shouldn’t be canonicalized to another URL -> Update its canonical tag to point to its own URL.

- If the alternate pages appear because of AMP, UTMs, or product variants -> Check how many alternate pages you’re generating. In eCommerce, you may end up with dozens of URLs for variants of the same page. Use best practices for filters and faceted navigation, or keep variant URLs out of Google’s crawl by using fragments (#) instead of URL parameters, since Google ignores everything after the #.
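To gauge how many alternates tracking parameters create, you can strip those parameters and count how many variants collapse into each clean URL. A minimal Python sketch, assuming your tracking parameters all start with `utm_` (extend the `prefixes` tuple if you use others) and that you have a list of crawled or logged URLs:

```python
from collections import Counter
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def strip_tracking_params(url, prefixes=("utm_",)):
    """Remove query parameters whose names start with any of the given prefixes.

    Assumption: only utm_* parameters are tracking parameters by default.
    """
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if not key.lower().startswith(prefixes)
    ]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), parts.fragment))


def count_alternates(urls, prefixes=("utm_",)):
    """Group URLs by their cleaned form and count how many variants map to each."""
    return Counter(strip_tracking_params(url, prefixes) for url in urls)
```

A clean URL with a high count is a sign that tracking links are generating many alternates, and it’s usually the URL those variants should declare as canonical.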

Page with redirect Google Coverage Status

If you recently changed your URLs or intentionally redirect visitors to a different page (e.g., an alternative for an out-of-stock product), the “Page with redirect” coverage status is entirely normal.

However, if the page shouldn’t be redirected or the redirect causes UX issues for your visitors, remove the redirect.

Duplicate, submitted URL not selected as canonical

This is a standard status if you have duplicate pages on your website. However, make sure Google has chosen the correct canonical URL.

If Google chose the correct URL, make it explicit: add a canonical tag pointing to that URL on the duplicate pages.

If Google chose the wrong URL, or the page should be indexed separately, strengthen it with unique content. Then, add a self-referencing canonical tag (a canonical URL that points to the page itself).
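To confirm which canonical a page actually declares, you can parse its `<link rel="canonical">` tag. This Python sketch assumes you already have the page’s HTML. It resolves relative canonical URLs against the page URL and treats a missing or duplicated canonical tag as no canonical at all. URLs are compared as exact strings, so trailing-slash or http/https differences count as mismatches.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class CanonicalParser(HTMLParser):
    """Collect href values of <link rel="canonical"> tags."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attrs = {name: (value or "") for name, value in attrs}
        rels = attrs.get("rel", "").lower().split()
        if "canonical" in rels and attrs.get("href"):
            self.canonicals.append(attrs["href"])


def canonical_url(html, page_url):
    """Return the absolute canonical URL, or None if it's missing or conflicting."""
    parser = CanonicalParser()
    parser.feed(html)
    if len(parser.canonicals) != 1:
        return None  # no canonical tag, or several competing ones
    return urljoin(page_url, parser.canonicals[0])


def is_self_canonical(html, page_url):
    """True if the page's canonical points to the page itself (exact string match)."""
    return canonical_url(html, page_url) == page_url
```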

Submitted URL marked ‘noindex’ in Google Coverage Report

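This status means you submitted the URL for indexing (usually through your sitemap), but the page carries a “noindex” directive. If you didn’t add a “noindex” tag (and you double-checked), the page isn’t private or blocked from crawling in any way, and the URL Inspection tool shows no problems, click Request Indexing in the URL Inspection tool.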
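Since this status usually means a URL is both in your sitemap and marked “noindex,” cross-checking the two lists is a quick way to find every affected page. The Python sketch below assumes you have the sitemap XML and a list of URLs your own crawl flagged as noindex (`noindexed_urls` is a hypothetical input). It only handles a regular sitemap, not a sitemap index file.

```python
import xml.etree.ElementTree as ET

# Namespace used by standard sitemaps (sitemaps.org protocol).
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml):
    """Return the <loc> URLs listed in a regular (non-index) sitemap."""
    root = ET.fromstring(sitemap_xml)
    locs = (loc.text or "" for loc in root.findall("sm:url/sm:loc", NS))
    return [url.strip() for url in locs if url.strip()]


def submitted_but_noindexed(sitemap_xml, noindexed_urls):
    """List sitemap URLs that also appear in your crawl's noindex results.

    Assumption: noindexed_urls comes from your own crawl or audit tool.
    """
    noindexed = set(noindexed_urls)
    return [url for url in sitemap_urls(sitemap_xml) if url in noindexed]
```

For each URL it returns, decide whether to drop the “noindex” tag or remove the URL from your sitemap.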

Duplicate without user-selected canonical

You’ll see this status if Google finds two very similar pages on your website and you haven’t selected which URL is the canonical one.

If the pages are duplicates, select the correct canonical URL and set up a 301 redirect from the other page to the canonical.

If the pages aren’t duplicates, check the following:

- Are your title tags too similar? -> Change your title tags and metadata.

- Does your website automatically generate similar URLs? -> Use consistent URL parameters and make sure parameterized URLs point to the right canonical.

- Did you redesign your website? -> It takes a while for Google to catch on. Check back in a bit to give Google time to reindex your website.
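To spot overly similar title tags, you can compare every pair of titles with a string-similarity ratio. This Python sketch uses the standard library’s `difflib.SequenceMatcher` and assumes you’ve collected each page’s title into a dict. The 0.9 threshold is an arbitrary starting point. Because it compares every pair, it’s only practical for a few thousand pages.

```python
from difflib import SequenceMatcher
from itertools import combinations


def similar_titles(titles, threshold=0.9):
    """Return (url_a, url_b, ratio) for title pairs at or above the threshold.

    Assumption: titles maps each URL to its <title> text, and 0.9 is an
    arbitrary cutoff. Compares every pair, so cost grows quadratically.
    """
    pairs = []
    for (url_a, title_a), (url_b, title_b) in combinations(sorted(titles.items()), 2):
        ratio = SequenceMatcher(None, title_a.lower(), title_b.lower()).ratio()
        if ratio >= threshold:
            pairs.append((url_a, url_b, round(ratio, 2)))
    return pairs
```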

Duplicate, Google chose different canonical than user

If Google thinks one page works better as the canonical one while the other is a duplicate, you’ll see the “Duplicate, Google chose different canonical than user” coverage status.

Inspect the URL to see which canonical Google selected, then add the canonical tag pointing to the page you think is the better option.

If the pages aren’t actually duplicates, make sure Google isn’t indexing pages that shouldn’t be indexed (add the “noindex” tag) and make the content on the affected pages more unique.

Blocked by robots.txt

If your robots.txt file blocks Google from crawling certain pages, you’ll see this status in Google Search Console.

First, sanity-check the pages; should they be indexed?

If they should, find the robots.txt rule that blocks them. Often, the culprit isn’t a rule targeting specific page URLs but a broader one that matches certain parameters or URL extensions. Correct the rule and request indexing.
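To find which of your URLs a robots.txt file blocks, you can test them with Python’s built-in `urllib.robotparser`. Treat this as a rough sketch with an important caveat: the standard-library parser matches rules by simple path prefix and in first-match order. It doesn’t implement Google’s `*` and `$` wildcards or its longest-match precedence, so confirm anything surprising in GSC.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that robots_txt disallows for the given user agent.

    Caveat: the standard-library parser uses simple prefix matching and
    first-match order, not Google's wildcard and longest-match rules.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]
```

Running it against your live robots.txt and a list of pages that should be indexed shows which rule families need a closer look.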

Indexed, though blocked by robots.txt

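If you intentionally blocked Google from crawling certain pages, carry on. Just keep in mind that robots.txt controls crawling, not indexing: Google can still index a blocked URL it finds through links. To keep a page out of the index, let Google crawl it and use a “noindex” tag instead.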

If you’re seeing the status for pages that should be indexed, dive into troubleshooting the “Indexed, though blocked by robots.txt” status:

- Are your URLs set up correctly? -> Make sure your URL formats allow the URLs to resolve correctly.

- Check your robots.txt directives -> Make sure you haven’t applied any directives that could unintentionally block your indexable pages (e.g., an overly broad Disallow rule). Note that Google doesn’t support “noindex” in robots.txt.

- Check your duplicates -> Implement the correct canonical URLs for any duplicate or variant pages.

Not found (404) Google Coverage Status

If you delete your pages, Google may display the 404 coverage status.

This status is normal, but you can also implement a 301 redirect to other relevant pages (for example, an out-of-stock product with a close variant available).
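If your site runs on Apache, one way to set up these 301 redirects is with mod_alias `Redirect 301` lines in your .htaccess file. The Python sketch below generates them from a mapping of old paths to new URLs (the paths are hypothetical). Note that `Redirect` matches by path prefix, so `/old` also redirects `/old/anything`. Other servers and WordPress redirect plugins use their own configuration.

```python
def htaccess_redirects(redirect_map):
    """Build Apache mod_alias 'Redirect 301' lines from {old_path: new_url}.

    Assumption: the site runs on Apache with .htaccess overrides enabled.
    """
    lines = []
    for old_path, new_url in sorted(redirect_map.items()):
        # mod_alias expects the old location as a URL-path starting with "/".
        if not old_path.startswith("/"):
            raise ValueError(f"old path must start with '/': {old_path!r}")
        # Paths containing spaces would need quoting; this sketch rejects them.
        if " " in old_path or " " in new_url:
            raise ValueError("paths with spaces are not supported in this sketch")
        lines.append(f"Redirect 301 {old_path} {new_url}")
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical example: a deleted product redirected to its close variant.
    print(htaccess_redirects({
        "/products/blue-widget": "https://example.com/products/blue-widget-v2",
    }))
```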

Blocked due to access forbidden (403)

If your website blocks Google from crawling a page, you’ll see the 403 coverage status. This is normal for pages you don’t want to be indexed (e.g., staging), but if the 403 isn’t intentional, ensure:

- Your themes and plugins aren’t blocking Google’s bots

- Your file permissions are set up correctly -> Directories should typically be 755 or 750, and files 644 or 640 (wp-config.php is the exception and can be stricter). Use your cPanel or FTP client to adjust the permissions for public files.

- Your .htaccess file isn’t corrupt -> Replace or regenerate it using an FTP client.

- You’re not blocking Googlebot IP addresses -> Add Googlebot IP addresses to the Allow list.
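Two of these checks are easy to script. The Python sketch below does two things. First, it walks a directory and flags files and folders whose permissions fall outside the ranges above, skipping wp-config.php (POSIX systems only). Second, it tests whether an IP address falls inside Googlebot’s ranges. Google publishes those ranges as a JSON file; the sketch assumes that file’s shape (a `prefixes` list of `ipv4Prefix`/`ipv6Prefix` entries). The ranges in the test are documentation placeholders, not Google’s. Google also documents verifying Googlebot with a reverse DNS lookup, which this sketch doesn’t cover.

```python
import ipaddress
import json
import os
import stat

# Assumption: the permission sets recommended above for a WordPress-style site.
ALLOWED_DIR_MODES = {0o755, 0o750}
ALLOWED_FILE_MODES = {0o644, 0o640}


def mode_is_allowed(mode, is_dir):
    """Check a permission mode (e.g. 0o644) against the recommended sets."""
    return mode in (ALLOWED_DIR_MODES if is_dir else ALLOWED_FILE_MODES)


def find_bad_permissions(root, skip=("wp-config.php",)):
    """Return (path, octal mode) pairs that fall outside the allowed modes."""
    problems = []
    for dirpath, _dirnames, filenames in os.walk(root):
        mode = stat.S_IMODE(os.stat(dirpath).st_mode)
        if not mode_is_allowed(mode, is_dir=True):
            problems.append((dirpath, oct(mode)))
        for name in filenames:
            if name in skip:
                continue  # wp-config.php is handled separately and can be stricter
            path = os.path.join(dirpath, name)
            mode = stat.S_IMODE(os.stat(path).st_mode)
            if not mode_is_allowed(mode, is_dir=False):
                problems.append((path, oct(mode)))
    return problems


def load_networks(ranges_json):
    """Parse JSON with a 'prefixes' list of ipv4Prefix/ipv6Prefix entries (assumed shape)."""
    networks = []
    for entry in json.loads(ranges_json).get("prefixes", []):
        cidr = entry.get("ipv4Prefix") or entry.get("ipv6Prefix")
        if cidr:
            networks.append(ipaddress.ip_network(cidr))
    return networks


def ip_in_networks(ip, networks):
    """True if the IP falls inside any network; IPv4/IPv6 mismatches never match."""
    address = ipaddress.ip_address(ip)
    return any(address in network for network in networks)
```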

Server error (5xx)

If your server can’t process Google’s crawl request, you’ll see a 5xx error. Check your server error logs to identify the specific issue. For example, your server could have been overloaded when Google crawled it.

It could be a fluke, so request indexing through URL Inspection for the affected pages.

If the problem persists, get in touch with your hosting provider.
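To see which URLs returned 5xx errors to Googlebot, you can filter your access log. This Python sketch assumes the common Apache/Nginx “combined” log format (adjust the regular expression if yours differs). It identifies Googlebot by its user-agent string, which can be spoofed, so treat the result as a starting point for your conversation with your host.

```python
import re
from collections import Counter

# Assumed "combined" log format:
# IP - user [time] "METHOD PATH PROTOCOL" STATUS SIZE "REFERER" "USER-AGENT"
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+)[^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_server_errors(log_lines):
    """Count (path, status) pairs where a Googlebot request got a 5xx response."""
    errors = Counter()
    for line in log_lines:
        match = LOG_LINE.match(line)
        if not match:
            continue  # skip lines in other formats
        if "Googlebot" in match.group("agent") and match.group("status").startswith("5"):
            errors[(match.group("path"), match.group("status"))] += 1
    return errors
```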

Submitted URL seems to be a Soft 404

A soft 404 happens when a page tells your visitors it doesn’t exist (or shows little to no content) but returns a 200 (OK) status code, telling bots that it does exist.

Typically, this happens when you add new (still empty) content tags to your blog or run an eCommerce store with dynamically generated pages.

If your product goes out of stock, your website could automatically delete the page while it stays indexed on Google, causing a massive UX problem.

Deal with out-of-stock or deleted eCommerce products correctly and implement redirects where needed.
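Google decides what counts as a soft 404 on its own, but you can catch likely candidates before it does. This Python sketch is a rough heuristic, not Google’s logic. It flags pages that return 200 yet contain a “not found”-style phrase or very little text. The phrase list and the 50-word cutoff are arbitrary assumptions, and the crude tag stripping doesn’t remove script or style contents.

```python
import re

# Assumption: a hypothetical phrase list; tune it to the wording your templates use.
NOT_FOUND_PHRASES = (
    "page not found",
    "no longer available",
    "nothing found",
    "no products found",
)


def looks_like_soft_404(status_code, html, min_words=50):
    """Heuristically flag a 200 response whose content suggests a missing page.

    Assumption: min_words=50 is an arbitrary cutoff for "very thin" pages.
    """
    if status_code != 200:
        return False  # a real 404/410 is a hard error, not a soft 404
    text = re.sub(r"<[^>]+>", " ", html)  # crude tag stripping
    words = text.lower().split()
    normalized = " ".join(words)
    if any(phrase in normalized for phrase in NOT_FOUND_PHRASES):
        return True
    return len(words) < min_words  # very thin pages are also suspicious
```

Pages it flags should either return a real 404/410 status code, redirect to a relevant alternative, or get enough content to stand on their own.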

Redirect error in Google Search Console

Typically, this status means your redirects send Google’s bots into an infinite redirect loop or through a redirect chain that’s too long.

Remove unnecessary redirects and re-submit the page.
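If you can export your redirect rules as a source-to-target map (for example, from a crawler or a redirect plugin; the map in the test is hypothetical), you can trace each chain and catch loops before Google does. In this Python sketch, `max_hops=10` is an assumed cutoff for flagging overly long chains.

```python
def follow_redirects(start, redirects, max_hops=10):
    """Follow a {source: target} redirect map starting from `start`.

    Returns (chain, problem), where problem is None, "loop", or "too many hops".
    Assumption: max_hops=10 is an arbitrary cutoff for overly long chains.
    """
    chain = [start]
    seen = {start}
    current = start
    while current in redirects:
        current = redirects[current]
        chain.append(current)
        if current in seen:
            return chain, "loop"
        seen.add(current)
        if len(chain) - 1 > max_hops:
            return chain, "too many hops"
    return chain, None
```

For chains that work but take several hops, point the first URL straight at the final destination.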

Page indexed without content

If Google can't read your page content, you’ll see the “Page indexed without content” coverage status.

Google’s team members have said this issue can occur when the robots.txt file blocks Google.

Check if your robots.txt blocks portions of your website content.

Fix Your Google Coverage Status Errors and Rank Higher

You don’t have to worry about every Google coverage status, especially if you’re not dealing with a site-wide issue. However, keep an eye on those pesky 404 and blocked errors for essential pages.

And remember: prevention is better than cure.

Use SEO best practices, keep an eye on your website with SiteGuru, and you’ll skyrocket your rankings!